Jun 8, 2022

Databases! Pandemic! Funding! A look back and a look forward

Andy Pavlo

The start-up game is rough. Fellow co-founders Dana Van Aken, Bohan Zhang, and I took a gamble on trying to build a start-up using ML for database tuning. And if launching the company during a pandemic wasn’t difficult enough, trying to raise a funding round amid dicey economic and political news certainly made things harder.

But these issues are why we were especially excited to announce last month that OtterTune raised $12 million in Series A funding, co-led by Intel Capital and Race Capital, with Accel participating.

We thought it would be helpful to others if we reflected on OtterTune’s journey from an academic research project to a thriving start-up. We also want to talk about the challenges of creating a remote-first company.

Academic spin out

We decided to form the OtterTune company right before the pandemic in February 2020. But when the US economy shut down in March 2020, venture capital funding wasn’t easy to come by. We ended up taking angel funds from our database friends. We got some bad advice from somebody in Pittsburgh and did not raise enough money initially, but we sorted that out later.

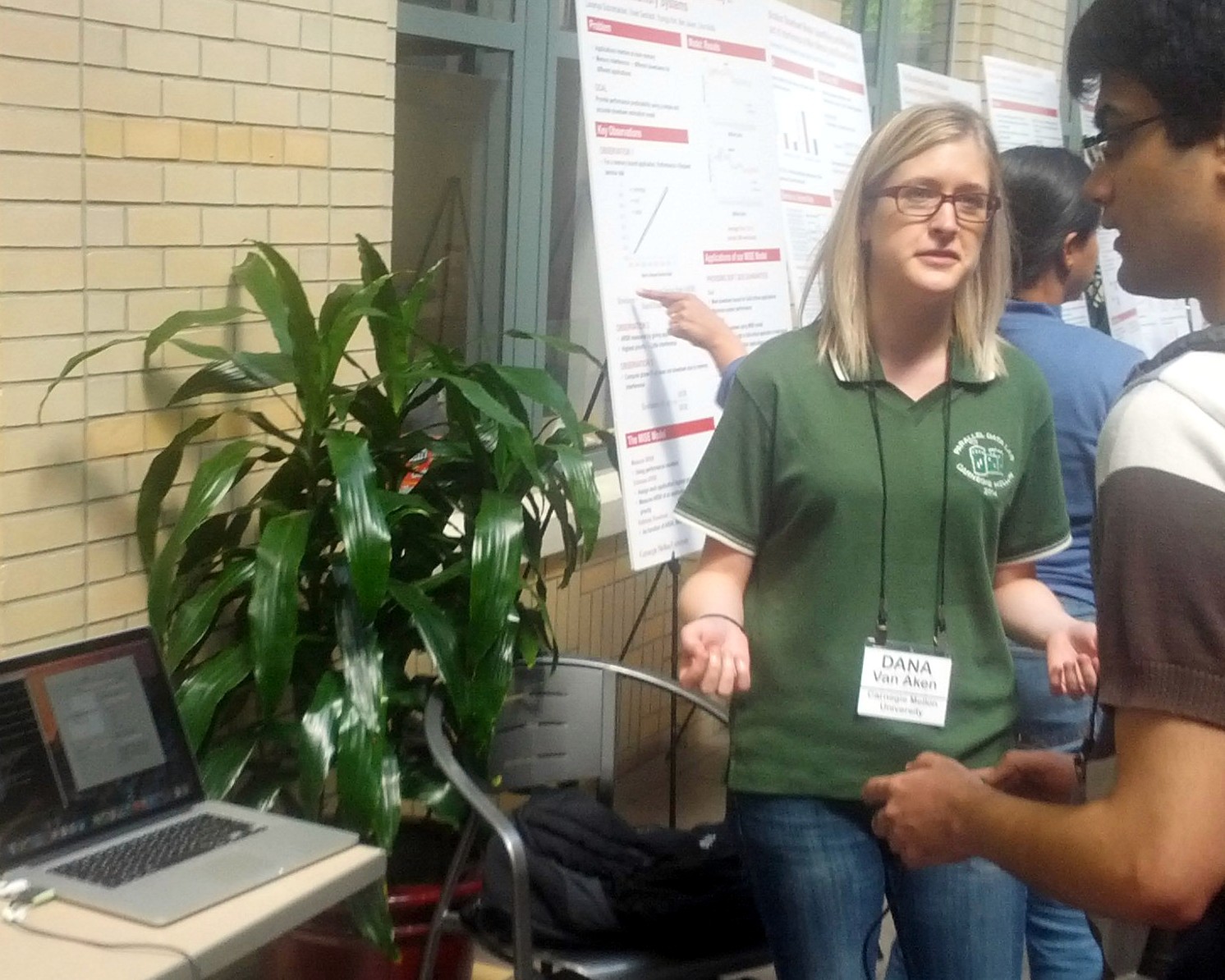

Dana Van Aken presenting an early prototype of OtterTune at Carnegie Mellon University in 2015.

The next challenge that we faced was how to move the project from the university to the start-up. This migration was seemingly straightforward at first because we did not patent anything related to OtterTune at Carnegie Mellon University (CMU) during Dana’s Ph.D. We just said that the OtterTune academic project ended, and we switched our focus to the new OtterTune start-up.

I believe that patents are valuable when used correctly, but I decided not to pursue them based on advice from Mike Stonebraker. Mike told me long ago that not having any patents makes it easier to spin out a research project from the university as a company because you don’t have to deal with lawyers.

But one problem that we did not anticipate was how to handle the source code. We weren’t planning on using the academic OtterTune source code, and the original algorithms are publicly available in our academic papers.

But when we raised the seed round with Accel in 2021, the VC lawyers were concerned that we were starting a company based on our research without any agreement with the university about what IP we were allowed to use. They wanted some assurance that CMU would not have some claim over our new software in the future.

Although OtterTune’s academic code was open-source, one mistake that we did make was to switch the license of the source code of OtterTune from Apache to MariaDB’s Business Source License (BSL) in 2019. We did this because we had some sketchy conversations with somebody at a major cloud vendor about their plans to use OtterTune.

This person told Dana and me that they wanted our help getting our software running for them so that they could “make a ton of money” with it. But they said that they could not provide us with any research funds for our time and effort. We said fuck that and changed the source code’s license to the BSL, which was enough to scare them away.

This switch to the BSL caused a problem with the VC lawyers. They felt that the BSL would restrict us in the future. We ended up having to license the source code from CMU even though we never used it. If I had to do it over again, I would have left the code with the Apache license and ignored the cloud vendor’s requests for help because their engineers never figured out how to make OtterTune’s ML algorithms work on their own. Again, applying ML to databases is hard.

I greatly appreciate our friends at Accel (Ping Li) for guiding us through the early stages of our company. The first question that other VCs ask is when I will leave CMU (spoiler: I’m not). Ping didn’t bother me with that noise and instead focused on what we needed to get the product to market and scale the company.

Ramping up, remote first

With our new cash money in 2020, we set out to find our first set of hires. One of the aspects of being a professor that I greatly enjoy is helping my students get jobs at database companies. But now, it was my time to hire them for myself.

OtterTune asynchronous pizza party in April 2021.

We reached out to the best students from my first eight years at CMU and, luckily, were able to hire four of them right away. Today, roughly 60% of the OtterTune team are from CMU, including myself and the other co-founders (Dana, Bohan). CMU has the best Computer Science students in the world, so why wouldn’t we try to hire them? Many other people, such as Mark Zuckerberg, have come to the same conclusion.

Since the company started at the height of the pandemic, we had no plans to rent an office, and the company was remote first from the beginning. The “work from home” freedom had some interesting outcomes and challenges. One unexpected trend for me is that everyone we hired who lived in the Bay Area wanted to move after joining us. Some moved back to Pittsburgh, and others moved to Seattle. This migration trend during the pandemic has been reported widely.

Luckily, since many of our first hires were former CMU-DB students, we had already worked with each other before. They were also familiar with my database-centric lifestyle, so I didn’t have to explain my eccentricities. But for the employees who were not previously my students, we had to develop ways to make them feel included. One thing that we did was get everyone a Nintendo Switch and hold weekly gaming competitions. We also encouraged everyone to attend the weekly database tech talks that I hosted.

Another thing we did was order everyone pizza for themselves at home when we hit a significant milestone. These things may sound trite to some, but I like to think they helped us keep it together in the formative months of the company.

What’s next

Despite pandemic-induced challenges, our raft continues to grow. We are expanding at a rapid pace. Two more talented CMU students joined us as interns in 2021 and returned full-time after graduating in 2022. We are also excited to work with our new friends at Intel Capital (Nick Washburn, Assaf Araki) and Race Capital (Alfred Chuang).

I am more convinced now than when OtterTune was just an academic project that using ML for knob tuning provides huge improvements for many databases. And Gartner agrees, based on their inclusion of OtterTune in the 2022 Cool Vendors in Augmented Data Management report.

But there are steep challenges to getting people to set up their databases to achieve that benefit. And then, it is challenging to convey to them how much we made things better, especially in production environments with workloads that vary during the day/week. This round of funding enables us to expand OtterTune’s automation capabilities for databases beyond knob tuning (e.g., index tuning, query tuning, and other optimizations).

So stay tuned this year for all the great things we are adding to OtterTune to provide you with peace of mind for your PostgreSQL and MySQL databases. You should also get amped for new OtterTune Records releases.